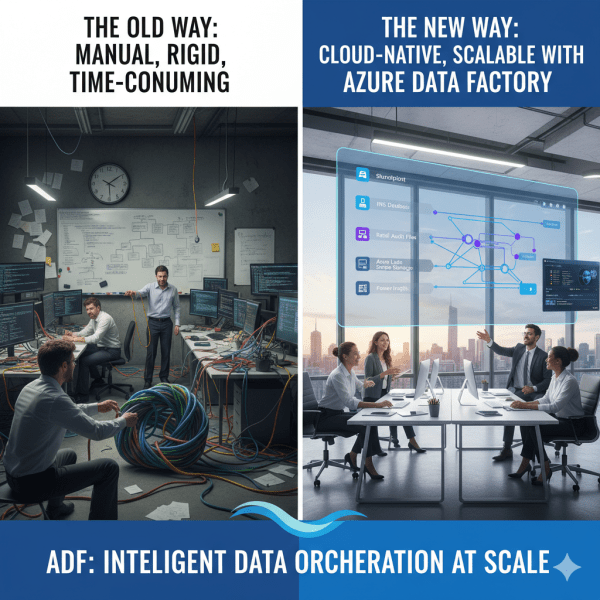

There was a time when moving data from multiple sources felt like untangling a giant knot. Every data refresh meant scripts breaking, manual checks, and long hours spent ensuring everything flowed from source to destination correctly. Then Azure Data Factory (ADF) entered the picture, and it didn’t just simplify ETL and ELT. It completely transformed how we think about data orchestration at scale.

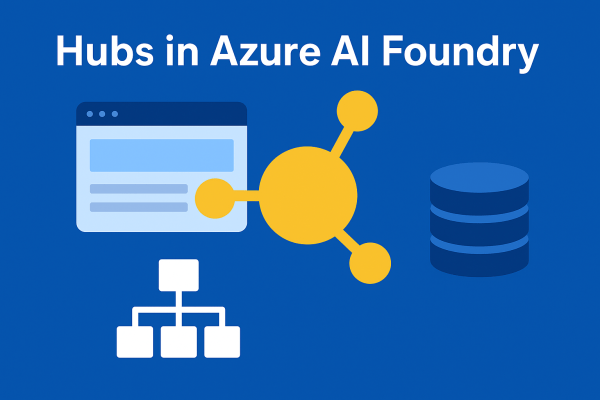

Hubs in Azure AI Foundry: The Nerve Center of Your AI Development

A hub is the foundation of Azure AI Foundry. Think of it as a control center where all the shared resources, security settings, and configurations for your AI development live. Without at least one hub, you cannot use the full power of Foundry’s solution development capabilities.

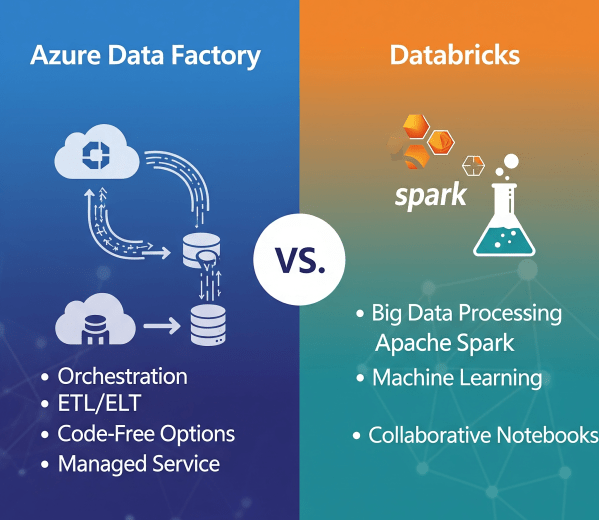

Azure Data Factory vs. Databricks: When to Use What?

In today’s cloud-first world, enterprises have no shortage of data services. But when it comes to building scalable, reliable data pipelines, two names often dominate the conversation: Azure Data Factory (ADF) and Azure Databricks.

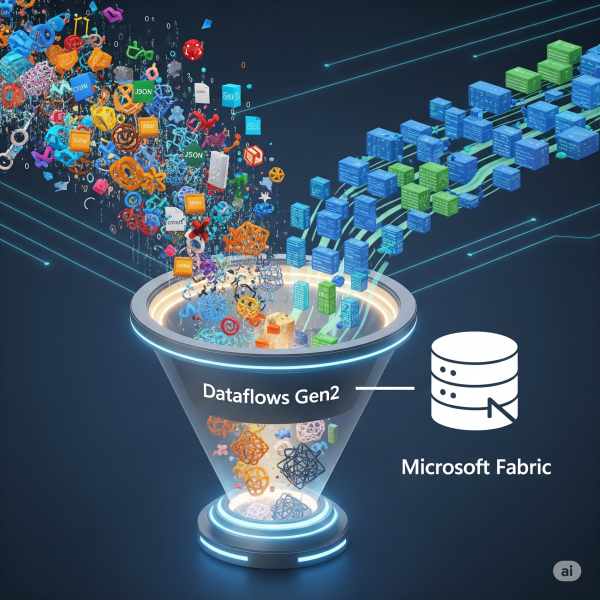

Transforming Data with Dataflows Gen2 in Microsoft Fabric

In Microsoft Fabric, raw data from multiple sources flows into the OneLake environment. But raw data isn’t always ready for analytics. It needs to be cleaned, reshaped, and enriched before it powers business intelligence, AI, or advanced analytics. That’s where Dataflows Gen2 come in. They let you prepare and transform data at scale inside Fabric, without needing heavy coding, while still integrating tightly with other Fabric workloads.

Ingesting Data with Data Factory in Microsoft Fabric

In Microsoft Fabric, Data Factory is the powerhouse behind data ingestion. It’s the next generation of Azure Data Factory, built right into the Fabric platform, making it easier than ever to:

- Connect to hundreds of data sources
- Transform and clean data on the fly
- Schedule and automate ingestion (without writing code)

Building a Lakehouse in Microsoft Fabric

A Lakehouse in Microsoft Fabric combines the scalability and flexibility of a data lake with the structure and performance of a data warehouse. It’s an all-in-one approach for storing, processing, and analyzing both structured and unstructured data.

How to Load Parquet Files from Azure Data Lake to Data Warehouse

By following these steps, you’ll be able to extract, transform, and load (ETL) your Parquet data into a structured data warehouse environment, enabling better analytics and reporting.

How to Copy Data from JSON to Parquet in Azure Data Lake

In this step-by-step guide, we’ll walk through creating Linked Services, defining datasets, and setting up a Copy Activity to convert your JSON data to Parquet format.
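To give a rough idea of what that Copy Activity looks like behind the designer, here is a minimal sketch that builds the activity's JSON definition as a Python dictionary. The activity name, the dataset names `JsonSourceDataset` and `ParquetSinkDataset`, and the helper `build_copy_activity` are hypothetical and used only for illustration. The store settings assume both datasets sit on Azure Data Lake Storage Gen2, and the linked services and datasets themselves are assumed to exist already.

```python
import json


def build_copy_activity(source_dataset: str, sink_dataset: str) -> dict:
    """Return a Copy activity definition as a plain dict (illustrative only)."""
    return {
        "name": "CopyJsonToParquet",  # hypothetical activity name
        "type": "Copy",
        # Datasets are referenced by name; they must already be defined.
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            # Read JSON files from the source store (ADLS Gen2 assumed).
            "source": {
                "type": "JsonSource",
                "storeSettings": {
                    "type": "AzureBlobFSReadSettings",
                    "recursive": True,
                },
            },
            # Write Parquet files to the sink store (ADLS Gen2 assumed).
            "sink": {
                "type": "ParquetSink",
                "storeSettings": {"type": "AzureBlobFSWriteSettings"},
            },
        },
    }


if __name__ == "__main__":
    activity = build_copy_activity("JsonSourceDataset", "ParquetSinkDataset")
    # Print the definition as it might appear in a pipeline's JSON view.
    print(json.dumps(activity, indent=2))
```

The format conversion is driven by the dataset types on each side: a JSON dataset as the source and a Parquet dataset as the sink. The activity itself only wires the two together.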