Microsoft Fabric brings together data engineering, data science, real-time analytics, and business intelligence into one unified platform. With so many capabilities available, organizations often ask: How do we get the most out of Fabric today while preparing for what’s coming next? This post shares practical performance tuning tips, cost optimization strategies, and a look at the Fabric roadmap based on the latest Microsoft updates.

Analyzing Data with Power BI in Microsoft Fabric

Data becomes valuable when it’s turned into insights that drive action. In Microsoft Fabric, this is where Power BI shines. By connecting directly to Lakehouses and Warehouses in Fabric, you can build interactive dashboards and reports, then publish and share them securely across your organization.

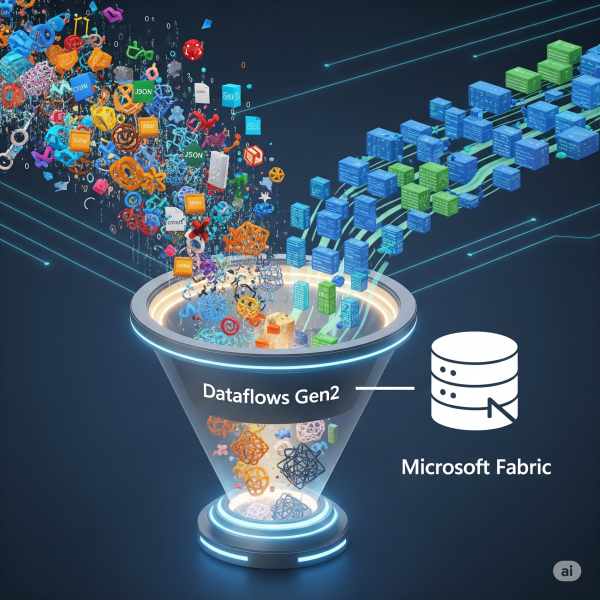

Transforming Data with Dataflows Gen2 in Microsoft Fabric

In Microsoft Fabric, raw data from multiple sources flows into the OneLake environment. But raw data isn’t always ready for analytics. It needs to be cleaned, reshaped, and enriched before it powers business intelligence, AI, or advanced analytics. That’s where Dataflows Gen2 come in. They let you prepare and transform data at scale inside Fabric, without needing heavy coding, while still integrating tightly with other Fabric workloads.

Ingesting Data with Data Factory in Microsoft Fabric

In Microsoft Fabric, Data Factory is the powerhouse behind data ingestion. It’s the next generation of Azure Data Factory, built right into the Fabric platform, making it easier than ever to:

- Connect to hundreds of data sources
- Transform and clean data on the fly
- Schedule and automate ingestion (without writing code)

Building a Lakehouse in Microsoft Fabric

A Lakehouse in Microsoft Fabric combines the scalability and flexibility of a data lake with the structure and performance of a data warehouse. It’s an all-in-one approach for storing, processing, and analyzing both structured and unstructured data.

What Is Microsoft Fabric and Why It Matters in 2025

In the last decade, data platforms have evolved from siloed solutions into fully integrated ecosystems. Microsoft Fabric is the latest and arguably boldest step in this evolution, bringing together data engineering, analytics, and governance into a single end-to-end SaaS platform.

Building Your First Azure Data Factory Pipeline: A Beginner’s Guide

Whether you're a data engineer, analyst, or developer stepping into the world of cloud-based data integration, Azure Data Factory (ADF) is a powerful tool worth mastering. It allows you to build robust, scalable data pipelines to move and transform data from various sources, all without managing infrastructure.

Python for Data Engineers: 5 Scripts You Must Know

As a data engineer, your job isn’t just about moving data. It’s about doing it reliably, efficiently, and repeatably, especially when working with cloud data platforms like Azure SQL Data Warehouse (now part of Azure Synapse Analytics). Python is one of the best tools to automate workflows, clean data, and interact with Azure SQL DW seamlessly.

5 Career Skills Every Aspiring Data Engineer Needs

Over the past ten years in data, I’ve been fortunate to collaborate with, learn from, and help grow some incredibly talented engineering teams. One thing has become clear along the way: Tools come and go, but the core skills that make a great data engineer remain timeless. In today’s cloud-first, real-time, business-aligned world, here are the five skills I believe every aspiring data engineer must master.

How to Scale Up and Scale Down a Dedicated SQL Pool (SQL DW) Using Azure Data Factory

Scaling up and scaling down your Azure Dedicated SQL Pool helps optimize both performance and costs.

How to Load Parquet Files from Azure Data Lake to Data Warehouse

By following these steps, you’ll be able to extract, transform, and load (ETL) your Parquet data into a structured data warehouse environment, enabling better analytics and reporting.

How to Refresh the Azure Analysis Services Model Using Azure Data Factory

This blog post shows you how to automate refreshes of your Azure Analysis Services model with Azure Data Factory, so the model stays up to date and your insights stay accurate.