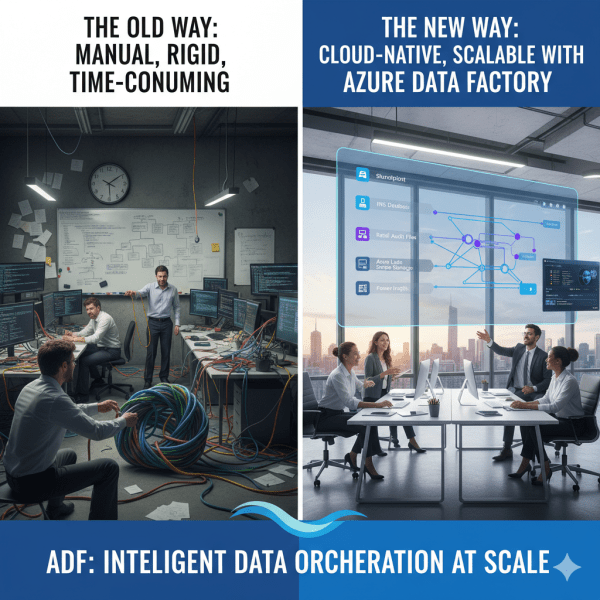

There was a time when moving data from multiple sources felt like untangling a giant knot. Every data refresh meant broken scripts, manual checks, and long hours spent making sure everything flowed correctly from source to destination. Then Azure Data Factory (ADF) entered the picture, and it didn’t just simplify ETL and ELT. It transformed how we think about data orchestration at scale.

Building Your First Azure Data Factory Pipeline: A Beginner’s Guide

Whether you’re a data engineer, analyst, or developer stepping into the world of cloud-based data integration, Azure Data Factory (ADF) is a powerful tool worth mastering. It allows you to build robust, scalable data pipelines to move and transform data from various sources, all without managing infrastructure.

How to Load Parquet Files from Azure Data Lake to Data Warehouse

By following these steps, you’ll be able to extract, transform, and load (ETL) your Parquet data into a structured data warehouse environment, enabling better analytics and reporting.

How to Copy Data from JSON to Parquet in Azure Data Lake

In this step-by-step guide, we’ll go through the exact process of creating Linked Services, defining datasets, and setting up a Copy Activity to seamlessly transfer your JSON data to Parquet format.
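
To give a rough idea of what the Copy Activity looks like once the Linked Services and datasets exist, here is a minimal Python sketch that assembles a pipeline definition as JSON. The pipeline, activity, and dataset names (`CopyJsonToParquet`, `SourceJsonDataset`, `SinkParquetDataset`) are hypothetical placeholders, and the sketch assumes both datasets have already been defined against a Data Lake Linked Service. It shows the general shape of the definition, not the exact configuration used in the guide.

```python
import json


def dataset_ref(name):
    """Build a dataset reference in the shape used by ADF pipeline JSON."""
    return {"referenceName": name, "type": "DatasetReference"}


def build_copy_pipeline(source_dataset, sink_dataset,
                        pipeline_name="CopyJsonToParquet"):
    """Assemble a pipeline with one Copy activity (JSON source, Parquet sink).

    All names passed in are hypothetical; the datasets are assumed to exist.
    """
    copy_activity = {
        "name": "CopyJsonToParquetActivity",  # hypothetical activity name
        "type": "Copy",
        "inputs": [dataset_ref(source_dataset)],
        "outputs": [dataset_ref(sink_dataset)],
        "typeProperties": {
            "source": {"type": "JsonSource"},   # read from the JSON dataset
            "sink": {"type": "ParquetSink"},    # write to the Parquet dataset
        },
    }
    return {
        "name": pipeline_name,
        "properties": {"activities": [copy_activity]},
    }


pipeline = build_copy_pipeline("SourceJsonDataset", "SinkParquetDataset")
print(json.dumps(pipeline, indent=2))
```

The printed JSON can be compared against what ADF Studio shows in a pipeline's code view after you build the same pipeline in the visual designer. A real pipeline will usually carry extra settings, such as store and format options, that are omitted here.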