When it comes to building a modern data platform in Azure, two technologies often spark debate: Microsoft Fabric and Databricks. Both are powerful. Both can process, transform, and analyze data. But they serve different purposes, and the smartest organizations know when to use each.

Microsoft Fabric Best Practices & Roadmap

Microsoft Fabric brings together data engineering, data science, real-time analytics, and business intelligence into one unified platform. With so many capabilities available, organizations often ask: How do we get the most out of Fabric today while preparing for what’s coming next? This post shares practical performance tuning tips, cost optimization strategies, and a look at the Fabric roadmap based on the latest Microsoft updates.

Governance & Security in Microsoft Fabric

As organizations adopt Microsoft Fabric to unify their data and analytics, ensuring governance and security becomes critical. Data is a strategic asset, and protecting it requires a mix of access controls, sensitivity labeling, and monitoring tools. Fabric brings these capabilities together so enterprises can innovate without sacrificing compliance.

Analyzing Data with Power BI in Microsoft Fabric

Data becomes valuable when it’s turned into insights that drive action. In Microsoft Fabric, this is where Power BI shines. By connecting directly to Lakehouses and Warehouses in Fabric, you can build interactive dashboards and reports, then publish and share them securely across your organization.

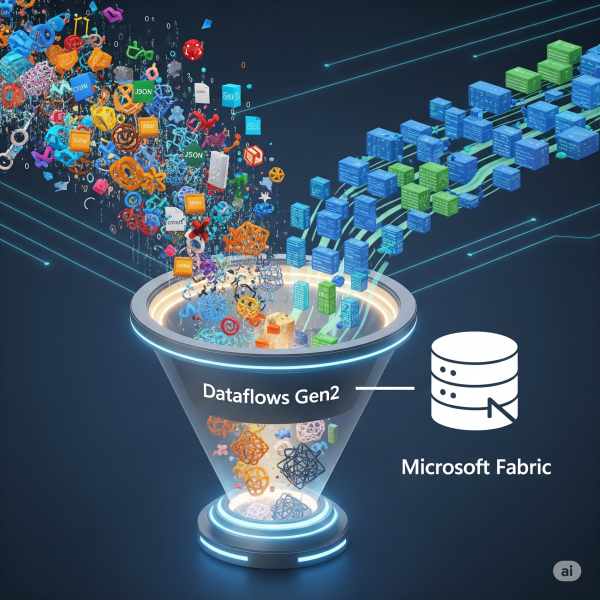

Transforming Data with Dataflows Gen2 in Microsoft Fabric

In Microsoft Fabric, raw data from multiple sources flows into the OneLake environment. But raw data isn’t always ready for analytics. It needs to be cleaned, reshaped, and enriched before it powers business intelligence, AI, or advanced analytics. That’s where Dataflows Gen2 come in. They let you prepare and transform data at scale inside Fabric, without needing heavy coding, while still integrating tightly with other Fabric workloads.

Ingesting Data with Data Factory in Microsoft Fabric

In Microsoft Fabric, Data Factory is the powerhouse behind data ingestion. It’s the next generation of Azure Data Factory, built right into the Fabric platform, making it easier than ever to:

- Connect to hundreds of data sources
- Transform and clean data on the fly
- Schedule and automate ingestion (without writing code)

Building a Lakehouse in Microsoft Fabric

A Lakehouse in Microsoft Fabric combines the scalability and flexibility of a data lake with the structure and performance of a data warehouse. It’s an all-in-one approach for storing, processing, and analyzing both structured and unstructured data.

Exploring OneLake: The Heart of Microsoft Fabric

OneLake is Microsoft Fabric’s built-in data lake, designed to store data in open formats like Delta Parquet and make it instantly available to all Fabric experiences (Lakehouse, Data Factory, Power BI, Real-Time Analytics).

Setting Up Your First Microsoft Fabric Workspace

If you’re starting with Microsoft Fabric, the first thing you’ll need is a workspace, the central hub where all data-related assets live. Think of it as your project’s headquarters: datasets, pipelines, Lakehouses, dashboards, and governance settings are all managed here.

What Is Microsoft Fabric and Why It Matters in 2025

In the last decade, data platforms have evolved from siloed solutions into fully integrated ecosystems. Microsoft Fabric is the latest and arguably boldest step in this evolution, bringing together data engineering, analytics, and governance into a single end-to-end SaaS platform.

Why “Good Enough” Data Isn’t Good Enough Anymore

For years, organizations have operated under the assumption that “good enough” data is… good enough. A few gaps here, a few duplicates there; as long as dashboards work and reports run, why sweat the small stuff? That mindset might have worked in the past. But today, “good enough” data is no longer enough, and holding onto that thinking could be quietly costing your business millions.

Demystifying Data Cataloguing: A Practical Guide for Modern Enterprises

In a world where data is everywhere but rarely well understood, data cataloguing is becoming a critical pillar of enterprise data strategy. Whether you’re a data engineer, analyst, or business leader, knowing what data you have, where it lives, and how to trust it is no longer a luxury; it’s a necessity.